Hi, I have done some more testing. Here are my notes.

HSDS Testing

The purpose of this test is to evaluate the read speed of an HSDS service installed locally (POSIX system). Different read methods are compared (h5pyd, Postman, Curl). In addition, some configuration options of the HSDS service are analyzed.

As a reference baseline, the results with a local HDF5 file are also presented.

Test Preparation

A clean HSDS service is created:

- The server is a clean Ubuntu 20.04.02 LTS machine (8 CPU, 8 GB of RAM, fast SSD disk).

- HSDS version:

  - master branch.
  - version 0.7.0beta.
  - commit: 1c0af45b1f38fec327be54acc963ee28025711aa :: (date: 2021-07-08)

- Install instructions (POSIX Install): https://github.com/HDFGroup/hsds/blob/master/docs/docker_install_posix.md

- The post install instructions are also followed: https://github.com/HDFGroup/hsds/blob/master/docs/post_install.md

- The override.yml file is created with the following content:

      max_request_size: 500m #MB - should be no smaller than client_max_body_size in nginx tmpl

- The HSDS service is launched with 6 data nodes: $ ./runall.sh 6

Test Data

Random test data is uploaded to the HSDS service and is also written to a local HDF5 file.

The example data is a 4,000,000 x 10 dataset (random float64 data), resizable with an unlimited number of rows and with chunking enabled.

This data is created with the first part of the following Python script:

    import numpy as np
    import time
    import h5py
    import h5pyd

    HDF5_PATH = "/home/bruce/00-DATA/HDF5/"  # My local path
    HSDS_PATH = "/home/test_user1/"  # Domain in HSDS server
    FILE_NAME = "testFile_fromPython.h5"
    NUM_ROWS = 4000000
    NUM_COLS = 10
    CHUNK_SIZE = [4000, NUM_COLS]
    DATASET_NAME = "myDataset"

    # ********** CREATE FILE: Write in chunks *********************
    randomData = np.random.rand(NUM_ROWS, NUM_COLS)

    # Local HDF5
    fHDF5 = h5py.File(HDF5_PATH + FILE_NAME, "w")
    dset_hdf5 = fHDF5.create_dataset(DATASET_NAME, (NUM_ROWS,NUM_COLS), dtype='float64', maxshape=(None,NUM_COLS), chunks=(CHUNK_SIZE[0], CHUNK_SIZE[1]))
    # Write one chunk of rows per iteration (slice ends are exclusive, so no -1)
    for iRow in range(0, NUM_ROWS, CHUNK_SIZE[0]):
        dset_hdf5[iRow:iRow+CHUNK_SIZE[0], :] = randomData[iRow:iRow+CHUNK_SIZE[0], :]
    print("HDF5 file created.")
    fHDF5.close()

    # HSDS
    fHSDS = h5pyd.File(HSDS_PATH + FILE_NAME, "w")
    dset_hsds = fHSDS.create_dataset(DATASET_NAME, (NUM_ROWS,NUM_COLS), dtype='float64', maxshape=(None,NUM_COLS), chunks=(CHUNK_SIZE[0], CHUNK_SIZE[1]))
    # Same chunk-by-chunk upload to the HSDS domain
    for iRow in range(0, NUM_ROWS, CHUNK_SIZE[0]):
        dset_hsds[iRow:iRow+CHUNK_SIZE[0], :] = randomData[iRow:iRow+CHUNK_SIZE[0], :]
    print("HSDS loaded with new data.")
    fHSDS.close()

    # ***** TEST FUNCTION *****************************************
    def test_system(p_file, p_type):
        # Open the data source with the library that matches p_type
        if p_type == "HDF5":
            print("Testing HDF5 local file...")
            f = h5py.File(p_file, "r")
        elif p_type == "HSDS":
            print("Testing HSDS...")
            f = h5pyd.File(p_file, "r")
        else:
            raise ValueError('Only HDF5 and HSDS options allowed.')

        dset = f[DATASET_NAME]

        # Read 1: 3000 rows of a single column
        tStart = time.time()
        myChunk01 = dset[32000:35000,6]
        tElapsed = time.time() - tStart
        print("Elapsed time :: myChunk01 = dset[32000:35000,6] :: ", format(tElapsed, ".4f"), 'seconds.')

        # Read 2: the first 800000 rows, all columns
        tStart = time.time()
        myChunk02 = dset[0:800000,...]
        tElapsed = time.time() - tStart
        print("Elapsed time :: myChunk02 = dset[0:800000,...] :: ", format(tElapsed, ".4f"), 'seconds.')

        # Read 3: the whole dataset except the last row (the slice end is exclusive)
        tStart = time.time()
        allData = dset[0:NUM_ROWS-1,:]
        tElapsed = time.time() - tStart
        print("Elapsed time :: allData = dset[0:NUM_ROWS-1,:] :: ", format(tElapsed, ".4f"), 'seconds.')

        f.close()
        print("Test ended.")

    # ********** TEST: READ FILE **********************************
    test_system(HDF5_PATH + FILE_NAME, "HDF5")
    test_system(HSDS_PATH + FILE_NAME, "HSDS")
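To put the timings in context, it may help to know how much data each of the three reads returns and how many chunks it spans. The following is a small sketch derived only from the shape, dtype and chunk layout used in the script; the helper name `selection_stats` is made up for illustration, and the chunk count assumes that every chunk covers all 10 columns (which is the case for the 4000 x 10 chunk shape).

```python
NUM_ROWS = 4_000_000
NUM_COLS = 10
CHUNK_ROWS = 4_000   # CHUNK_SIZE[0] in the script
ITEM_SIZE = 8        # bytes per float64 value


def selection_stats(row_start, row_stop, n_cols):
    """Return (payload in bytes, number of chunks spanned) for a row range.

    row_stop is exclusive, as in Python slicing. Each chunk spans all
    columns, so only the row range decides how many chunks are spanned.
    """
    n_rows = row_stop - row_start
    payload = n_rows * n_cols * ITEM_SIZE
    first_chunk = row_start // CHUNK_ROWS
    last_chunk = (row_stop - 1) // CHUNK_ROWS
    return payload, last_chunk - first_chunk + 1


reads = {
    "dset[32000:35000,6]": selection_stats(32_000, 35_000, 1),
    "dset[0:800000,...]": selection_stats(0, 800_000, NUM_COLS),
    "dset[0:NUM_ROWS-1,:]": selection_stats(0, NUM_ROWS - 1, NUM_COLS),
}
for label, (payload, chunks) in reads.items():
    print(f"{label:22s} {payload / 1e6:10.3f} MB  {chunks:5d} chunks")
```

The payload is the size of the returned array only; a single-column read presumably still has to touch the full chunk on the storage side.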

Test Protocol

Base Reference: HDF5

The HDF5 file is read directly (h5py library) as the base reference of the results.

The test is performed with the script shown in section Test Data (test_system function).

HSDS Testing

The test data contained in HSDS is read with the following methods:

- h5pyd library.
- Postman program.
- Curl program.

HSDS is configured with http_compression set to true or false in the override.yml file.

Next, the configuration of each read method is described.

H5pyd

The data is read with the h5pyd Python client library (version 0.8.4).

The test is performed with the test_system function shown in the Python script of the Test Data section.
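Since a single timing can vary from run to run, one option is to repeat each read several times and report the minimum, median and maximum. The sketch below is not part of the test that was run; `time_read` and `summarize` are hypothetical helper names, and the workload in the example is only a stand-in for a dataset read.

```python
import statistics
import time


def time_read(read_fn, repeats=5):
    """Call read_fn() `repeats` times and return the elapsed times in seconds."""
    timings = []
    for _ in range(repeats):
        t_start = time.perf_counter()
        read_fn()
        timings.append(time.perf_counter() - t_start)
    return timings


def summarize(timings):
    """Return (min, median, max) of a list of timings."""
    return min(timings), statistics.median(timings), max(timings)


if __name__ == "__main__":
    # Stand-in workload; with an open dataset one would pass for example
    # lambda: dset[32000:35000, 6] instead.
    timings = time_read(lambda: sum(range(100_000)), repeats=5)
    t_min, t_med, t_max = summarize(timings)
    print(f"min {t_min:.4f} s, median {t_med:.4f} s, max {t_max:.4f} s")
```

`time.perf_counter()` is used here instead of `time.time()` because it is a monotonic, high-resolution clock intended for measuring short intervals.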

Postman

The test data is read with HTTP GET requests performed with the Postman program. The requests follow the HSDS REST API documentation, specifically the Get Value request.

Firstly, the UUID of the dataset is obtained with the following request:

GET http://localhost:5101/datasets?domain=/home/test_user1/testFile_fromPython.h5

The response is:

"datasets": ["d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf"]

Next, the segments of the dataset are obtained with GET requests. These requests are performed with the following headers:

The performed HTTP GET requests are the following:

GET http://localhost:5101/datasets/d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf/value?domain=/home/test_user1/testFile_fromPython.h5&select=[32000:35000,6]

GET http://localhost:5101/datasets/d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf/value?domain=/home/test_user1/testFile_fromPython.h5&select=[0:800000,6]

GET http://localhost:5101/datasets/d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf/value?domain=/home/test_user1/testFile_fromPython.h5&select=[0:3999999,0:9]
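For reference, the same Get Value requests could be issued and timed from plain Python. This is only a sketch using the standard library; it was not part of the measurements. The endpoint, domain and dataset UUID are copied from the requests above, the `Accept` and `Authorization` headers are assumed to be the ones used in the Curl commands of this post, and the function names are made up for illustration.

```python
import base64
import time
import urllib.parse
import urllib.request

ENDPOINT = "http://localhost:5101"
DOMAIN = "/home/test_user1/testFile_fromPython.h5"
DSET_ID = "d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf"


def build_value_url(select):
    """Build the Get Value URL for one selection string, e.g. '[0:10,6]'."""
    query = urllib.parse.urlencode(
        {"domain": DOMAIN, "select": select},
        safe="/[]:,",  # keep the selection unescaped, as in the requests above
    )
    return f"{ENDPOINT}/datasets/{DSET_ID}/value?{query}"


def basic_auth_header(username, password):
    """Return the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return f"Basic {token}"


def timed_get(select, username, password):
    """Issue the request and return (number of bytes read, elapsed seconds)."""
    request = urllib.request.Request(
        build_value_url(select),
        headers={
            "Accept": "application/octet-stream",  # assumption: binary response
            "Authorization": basic_auth_header(username, password),
        },
    )
    t_start = time.perf_counter()
    with urllib.request.urlopen(request) as response:
        body = response.read()
    return len(body), time.perf_counter() - t_start


if __name__ == "__main__":
    # Only prints the URL; timed_get() needs a running HSDS service.
    print(build_value_url("[32000:35000,6]"))
```

Note that `urllib` does not ask for a compressed response by default, so comparing against http_compression: true would presumably require adding an `Accept-Encoding: gzip` header and decompressing the body.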

Curl

The test data is read with HTTP GET requests performed with the Curl command line program. They are the same requests as in the Postman case. The data is written to a file. The elapsed times are measured and reported with the -w option of the program.

The commands are the following:

curl -g --request GET 'http://localhost:5101/datasets/d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf/value?domain=/home/test_user1/testFile_fromPython.h5&select=[32000:35000,6]' --header 'Accept: application/octet-stream' --header 'Authorization: Basic dGVzdF91c2VyMTp0ZXN0' -o ~/00-DATA/tmp/testDownloadedData01.txt -w "@curlFormat.txt"

curl -g --request GET 'http://localhost:5101/datasets/d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf/value?domain=/home/test_user1/testFile_fromPython.h5&select=[0:80000,6]' --header 'Accept: application/octet-stream' --header 'Authorization: Basic dGVzdF91c2VyMTp0ZXN0' -o ~/00-DATA/tmp/testDownloadedData02.txt -w "@curlFormat.txt"

curl -g --request GET 'http://localhost:5101/datasets/d-11d22dd0-950bbd8c-bcf7-f7efb4-749aaf/value?domain=/home/test_user1/testFile_fromPython.h5&select=[0:3999999,0:9]' --header 'Accept: application/octet-stream' --header 'Authorization: Basic dGVzdF91c2VyMTp0ZXN0' -o ~/00-DATA/tmp/testDownloadedData03.txt -w "@curlFormat.txt"

The curlFormat.txt file is the following:

    \n
    http_version: %{http_version}\n
    response_code: %{response_code}\n
    size_download: %{size_download} bytes\n
    speed_download: %{speed_download} bytes/s\n
    time_namelookup: %{time_namelookup} s\n
    time_connect: %{time_connect} s\n
    time_appconnect: %{time_appconnect} s\n
    time_pretransfer: %{time_pretransfer} s\n
    time_redirect: %{time_redirect} s\n
    time_starttransfer: %{time_starttransfer} s\n
    ----------\n
    time_total: %{time_total} s\n

Test Results

Local HDF5 file

Elapsed time :: myChunk01 = dset[32000:35000,6] :: 0.0005 seconds.

Elapsed time :: myChunk02 = dset[0:800000,...] :: 0.0370 seconds.

Elapsed time :: allData = dset[0:NUM_ROWS-1,:] :: 0.1840 seconds.

H5pyd

- When http_compression is true:

  Elapsed time :: myChunk01 = dset[32000:35000,6] :: 0.0082 seconds.

  Elapsed time :: myChunk02 = dset[0:800000,...] :: 3.9666 seconds.

  Elapsed time :: allData = dset[0:NUM_ROWS-1,:] :: 20.6691 seconds.

- When http_compression is false:

  Elapsed time :: myChunk01 = dset[32000:35000,6] :: 0.0063 seconds.

  Elapsed time :: myChunk02 = dset[0:800000,...] :: 0.4718 seconds.

  Elapsed time :: allData = dset[0:NUM_ROWS-1,:] :: 3.6059 seconds.

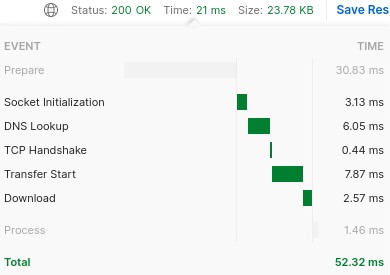

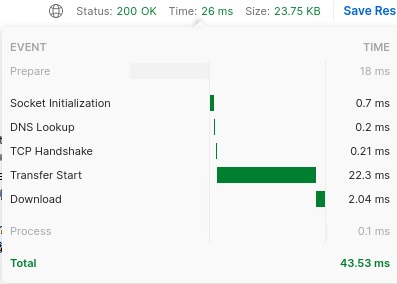

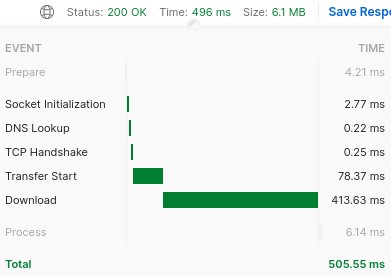

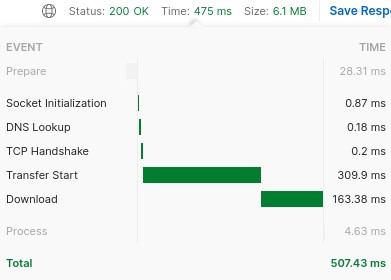

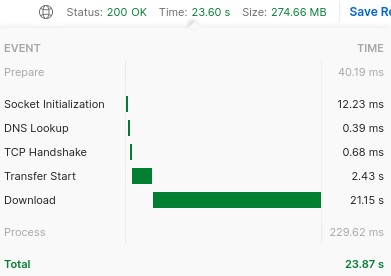

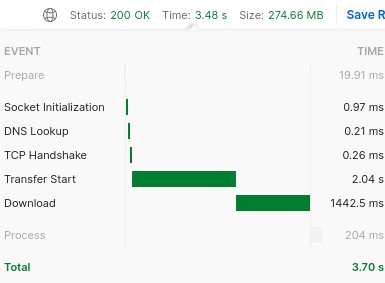

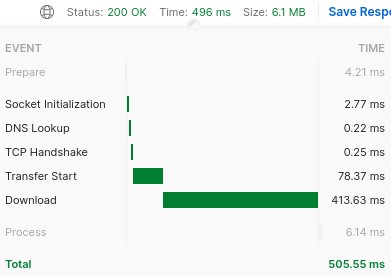

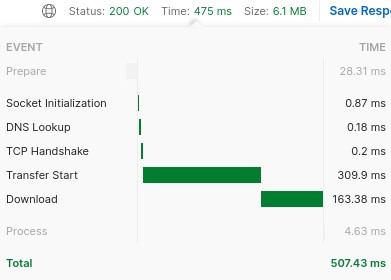

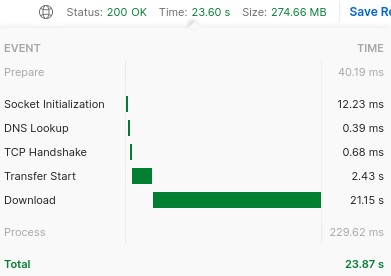

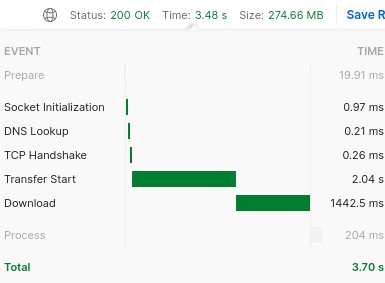

POSTMAN

| select | http_compression: true | http_compression: false |
| --- | --- | --- |
| [32000:35000,6] | | |
| [0:800000,6] | | |
| [0:3999999,0:9] | | |

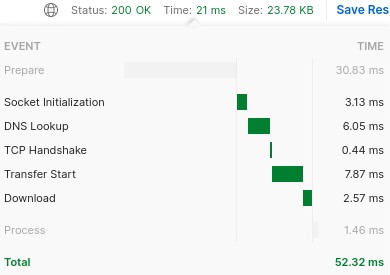

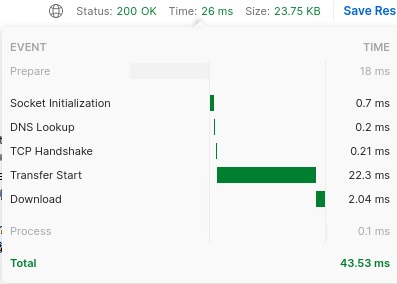

CURL

| select | http_compression: true | http_compression: false |
| --- | --- | --- |
| [32000:35000,6] | | |
| [0:800000,6] | | |
| [0:3999999,0:9] | | |

Regarding the results shown:

- time_starttransfer: The time, in seconds, it took from the start until the first byte was just about to be transferred. This includes time_pretransfer and also the time the server needed to calculate the result.
- The equivalent of Postman's Download time is: time_total - time_starttransfer.
- The real download speed is size_download / (time_total - time_starttransfer).
- For details, see the documentation of the -w option of the Curl program.

For example, in the case of:

- http_compression: true, the real download speeds are 16 MB/s, 213 MB/s and 156 MB/s.
- http_compression: false, the real download speeds are 69 MB/s, 36 MB/s and 150 MB/s.
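The real download speed defined above can be computed directly from the text that Curl prints with the curlFormat.txt template. The following is a minimal sketch; the function names are hypothetical and the sample values are made up for illustration, they are not measured results.

```python
def parse_write_out(text):
    """Parse 'name: value unit' lines written via curlFormat.txt into floats."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep or not rest.strip():
            continue  # blank lines and the '----------' separator
        try:
            fields[name.strip()] = float(rest.split()[0])
        except ValueError:
            continue  # skip lines whose value is not numeric
    return fields


def real_download_speed(fields):
    """size_download / (time_total - time_starttransfer), in bytes per second."""
    transfer_time = fields["time_total"] - fields["time_starttransfer"]
    return fields["size_download"] / transfer_time


# Made-up numbers, for illustration only (not measured results).
sample = """
http_version: 1.1
response_code: 200
size_download: 1000000 bytes
speed_download: 400000 bytes/s
time_starttransfer: 2.000000 s
----------
time_total: 2.500000 s
"""
fields = parse_write_out(sample)
print(f"{real_download_speed(fields) / 1e6:.1f} MB/s")
```

In this made-up sample, speed_download (which Curl computes over the total time) is much lower than the real download speed, because most of the total time is spent waiting for the first byte.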

Discussion

It is important to note that the obtained results are not constant. There are slight differences if the tests are repeated. However, the following facts can be discussed:

- Accessing local data through local HSDS is much slower than using local HDF5. The fastest HSDS read method (Curl) requires around 3.3 seconds to extract the full dataset, whereas accessing the HDF5 data directly requires around 0.2 seconds.
- H5pyd and Postman show a high speed penalty if http_compression: true. If it is set to false, the full dataset read operation requires around 3.6 seconds. However, if http_compression: true, the elapsed time is around 20 seconds.
- When using Curl, there is no speed penalty if http_compression: true. Note: the obtained binary data in the output file is the same (verified).
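The size of these penalties can be expressed as ratios and effective throughputs. The short calculation below uses only the full-dataset h5pyd and HDF5 timings reported in the Test Results section; the payload size is derived from the read `dset[0:NUM_ROWS-1,:]` (3,999,999 rows x 10 columns x 8 bytes), and the variable names are chosen here for illustration.

```python
# Elapsed times in seconds for the full-dataset read, copied from Test Results.
t_hdf5 = 0.1840
t_h5pyd_compressed = 20.6691    # http_compression: true
t_h5pyd_uncompressed = 3.6059   # http_compression: false

# Payload of dset[0:NUM_ROWS-1,:] in MB: rows x columns x bytes per float64.
payload_mb = 3_999_999 * 10 * 8 / 1e6

slowdown_vs_hdf5 = t_h5pyd_uncompressed / t_hdf5
compression_penalty = t_h5pyd_compressed / t_h5pyd_uncompressed

print(f"h5pyd (compression off) vs. local HDF5: {slowdown_vs_hdf5:.1f}x slower")
print(f"http_compression true vs. false:        {compression_penalty:.1f}x slower")

# Effective throughput of each full-dataset read.
for label, elapsed in [
    ("local HDF5", t_hdf5),
    ("h5pyd, compression off", t_h5pyd_uncompressed),
    ("h5pyd, compression on", t_h5pyd_compressed),
]:
    print(f"{label:24s} {payload_mb / elapsed:8.1f} MB/s")
```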