A Simple Vector Database in HDF5 - Gerd Heber on Call the Doctor 12/16/25

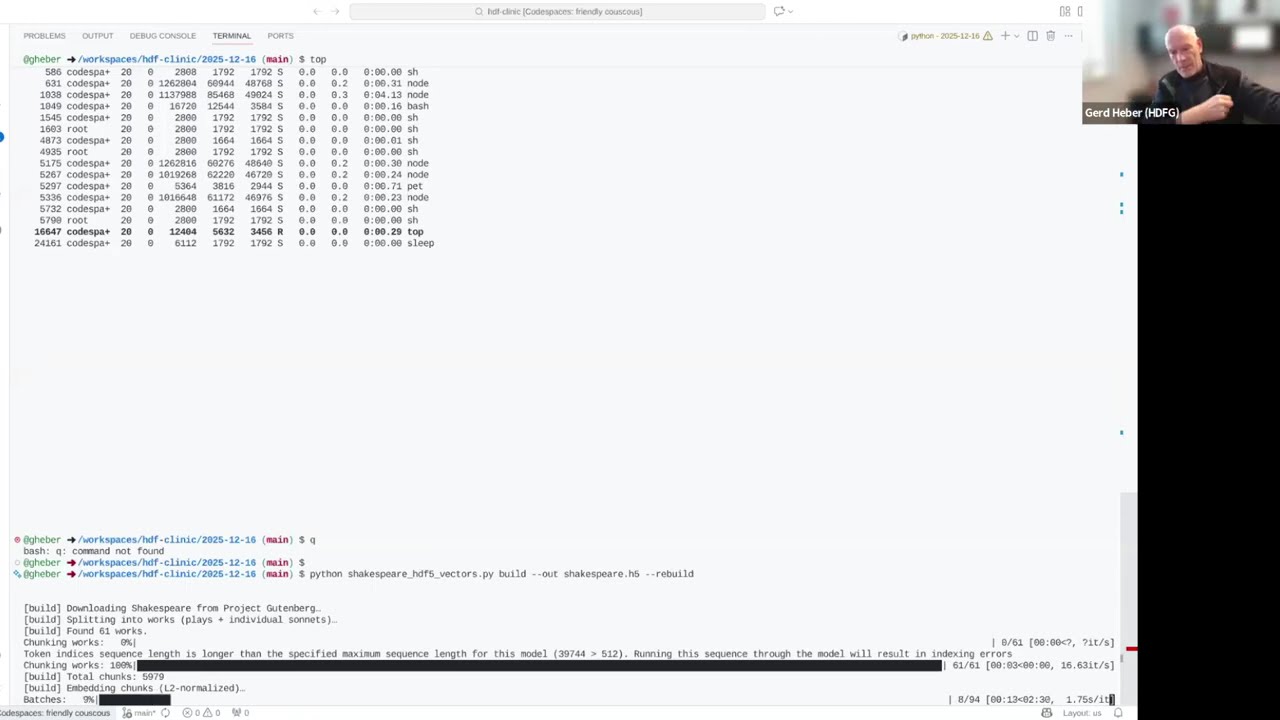

In this 20‑minute HDF Clinic hosted by our very own Gerd Heber, we’ll build a minimal vector database stored entirely in a single HDF5 file: vectors (embeddings) + metadata + similarity search. Using a short Python script, we’ll download the Project Gutenberg Shakespeare corpus, split it into works (and individual sonnets), chunk text by tokens (256 tokens with 32‑token overlap), and generate unit‑normalized embeddings using a SentenceTransformers model. We’ll write the results to an HDF5 layout with compressed datasets for text chunks, work IDs, and embeddings, plus a compact “works index” table for metadata filtering. Finally, we’ll run a few live queries using cosine similarity (implemented as a dot product over normalized vectors, computed in blocks) and discuss practical upgrades, such as richer metadata, better chunking, and adding an approximate nearest neighbor (ANN) index for speed at scale.
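As a taste of two steps named above, here is a minimal sketch of overlapping token chunking and of block-wise cosine search over unit-normalized vectors. It is not the script from the session. The function names (`chunk_tokens`, `normalize`, `top_k`) are hypothetical, plain token lists stand in for a real tokenizer's output, and plain Python lists stand in for the HDF5 embeddings dataset and the SentenceTransformers vectors.

```python
import heapq
import math


def chunk_tokens(tokens, size=256, overlap=32):
    """Split a token list into windows of `size` tokens that share
    `overlap` tokens with the previous window."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reaches the end of the text
    return chunks


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def top_k(query, vectors, k=3, block_size=2):
    """Return the k best (score, index) pairs, scanning `vectors` in blocks.

    `vectors` is assumed to hold unit-normalized rows; in an HDF5-backed
    store each block would be one slice read from the embeddings dataset.
    """
    q = normalize(query)
    best = []  # min-heap holding the k highest scores seen so far
    for start in range(0, len(vectors), block_size):
        block = vectors[start:start + block_size]
        for offset, vec in enumerate(block):
            score = sum(a * b for a, b in zip(q, vec))
            item = (score, start + offset)
            if len(best) < k:
                heapq.heappush(best, item)
            else:
                heapq.heappushpop(best, item)
    return sorted(best, reverse=True)


# Toy usage: 600 fake tokens and four 2-D "embeddings".
chunks = chunk_tokens(list(range(600)))
store = [normalize(v) for v in ([1, 0], [0, 1], [1, 1], [-1, 0])]
hits = top_k([1.0, 0.1], store, k=2)
```

Scanning in blocks keeps memory use bounded by the block size rather than by the size of the whole collection, which is the point of computing the dot products in blocks.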

To join, just jump on the Zoom meeting:

Launch Meeting - Zoom

December 16, 2025, 12:20 p.m. Central Time (US/Canada)