I resolved the problem some time ago. The performance problem was in the 'attributes'.

The floating-point datasets, several kilobytes each, were being written in a few microseconds. However, each dataset had a modest number of character 'attributes' associated with it, such as heading and GPS location. These attributes, only a few hundred bytes each, were taking tens or hundreds of milliseconds to write. The solution was to scrap the 'attributes' and simply insert byte datasets containing the pertinent metadata after each floating-point measurement dataset.
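A minimal Java sketch of that workaround, assuming the metadata is a heading plus a GPS latitude/longitude. The class name `MeasurementMetadata`, its fields, the `key=value` text layout and the `_meta` naming convention are all hypothetical; the actual HDF5 write of the resulting byte array through the Java wrapper is deliberately left out rather than guessed at.

```java
import java.nio.charset.StandardCharsets;
import java.util.Locale;

/**
 * Hypothetical sketch: per-measurement metadata packed into a byte array that
 * could be stored as a small byte dataset written right after the
 * floating-point measurement dataset, instead of as several string attributes.
 * Field names and the "key=value" text layout are assumptions.
 */
final class MeasurementMetadata {
    final double headingDeg;
    final double latitudeDeg;
    final double longitudeDeg;

    MeasurementMetadata(double headingDeg, double latitudeDeg, double longitudeDeg) {
        this.headingDeg = headingDeg;
        this.latitudeDeg = latitudeDeg;
        this.longitudeDeg = longitudeDeg;
    }

    /** Encodes the metadata as UTF-8 "key=value" lines; Locale.ROOT forces '.' as the decimal point. */
    byte[] toBytes() {
        String text = String.format(Locale.ROOT, "heading=%.2f\nlat=%.6f\nlon=%.6f\n",
                headingDeg, latitudeDeg, longitudeDeg);
        return text.getBytes(StandardCharsets.UTF_8);
    }

    /** Hypothetical naming convention pairing each measurement with its metadata dataset. */
    static String metadataNameFor(String measurementName) {
        return measurementName + "_meta";
    }

    public static void main(String[] args) {
        MeasurementMetadata meta = new MeasurementMetadata(12.5, 37.5, -122.25);
        byte[] payload = meta.toBytes();
        // The payload would then be written as a 1-D byte dataset named
        // metadataNameFor("measurement_0001") using the HDF5 Java wrapper (call not shown).
        System.out.println(metadataNameFor("measurement_0001") + ": " + payload.length + " bytes");
    }
}
```

Reading the metadata back only requires decoding the bytes as UTF-8 and splitting on the same layout.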


________________________________

From: Hdf-forum <hdf-forum-bounces@lists.hdfgroup.org> on behalf of Jason Newton <nevion@gmail.com>

Sent: Monday, May 9, 2016 3:52 PM

To: HDF Users Discussion List

Subject: Re: [Hdf-forum] Performance problem writing small datasets hdf5.8.0.2

Karl,

What options are you creating your datasets with? Your mention of writing the smaller HDF5 files suggests that some filters (like gzip) are being used, which would be a no-no for real-time expectations. It would be useful if you could post your code, or a pared-down version of it. But your very first experiment should be to try 1.8.16, and after that to see whether calling H5Pset_libver_bounds with H5F_LIBVER_LATEST for both the low and high arguments helps. If you are using 1.8.02, you're using a copy from 2009 and missing many bug fixes.

Generally I would append to one dataset; this is actually what the packet table does, except that it also keeps the underlying H5TB table open, which H5TBappend_records does not. But as I said, for 6 Hz to maybe 100 Hz it should work, though I don't know how much jitter to expect. By the way, tmpfs is RAM, so it is very useful to test against it to see whether the delay is a result of fsyncs or something similar.

-Jason

On Mon, May 9, 2016 at 4:42 PM, Karl Hoover <mugwortmoxa@hotmail.com> wrote:

Greetings,

Jason, thanks very much for the suggestions. I have tried different hard disk hardware, different computers, different file system types, and turning off journaling. So far the result has always been that the raw binary files can be written at full speed, while writing the smaller HDF5 files introduces the constantly growing delay.

It does seem that my approach of making each measurement a new HDF5 dataset might not be very good. But it is convenient, and my whole software 'system' expects the data to be laid out that way.

Best regards,

Karl Hoover

________________________________

From: Hdf-forum <hdf-forum-bounces@lists.hdfgroup.org> on behalf of Jason Newton <nevion@gmail.com>

Sent: Monday, May 9, 2016 1:24 PM

To: HDF Users Discussion List

Subject: Re: [Hdf-forum] Performance problem writing small datasets hdf5.8.0.2

Hi,

I've used HDF5 in soft real time before, writing more data than you are, to SSD, and mostly met timings you would likely find acceptable, but I used the packet table interface (C/C++, not Java). So it is possible, though I will also tell you that I used POSIX file I/O for more safety, performance and determinism, and then converted the data to HDF5 after the DAQ was complete, for analysis and long-term storage. For your use case, though, it sounds fine.

You might want to test with a tmpfs partition like /dev/shm/ or /tmp, assuming it is mounted as tmpfs, to separate hardware performance from filesystem performance.
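One rough way to run that comparison from the Java side, independent of HDF5: append fixed-size records to a single file that stays open, time each write, and run the same probe once against a tmpfs directory and once against the real disk. `WriteLatencyProbe`, its parameters and the 4096-byte record size are hypothetical choices. With `forceEachWrite` off the timings mostly reflect the page cache rather than the device; turning it on calls `FileChannel.force` after every record to approximate fsync-per-write behavior.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;

/**
 * Hypothetical probe: appends fixed-size records to ONE file that stays open
 * and records how long each write takes, so the same run can be repeated on a
 * tmpfs directory and on the real disk.
 */
final class WriteLatencyProbe {

    /** Returns per-record write latencies in nanoseconds. */
    static long[] measure(Path dir, int records, int recordBytes, boolean forceEachWrite)
            throws IOException {
        Path file = Files.createTempFile(dir, "probe", ".bin");
        long[] latencies = new long[records];
        ByteBuffer record = ByteBuffer.allocate(recordBytes); // zero-filled payload
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.WRITE,
                                               StandardOpenOption.APPEND)) {
            for (int i = 0; i < records; i++) {
                record.clear(); // rewind so the whole buffer is written again
                long t0 = System.nanoTime();
                while (record.hasRemaining()) {
                    ch.write(record);
                }
                if (forceEachWrite) {
                    ch.force(false); // push file content to the device, roughly like fdatasync
                }
                latencies[i] = System.nanoTime() - t0;
            }
        } finally {
            Files.deleteIfExists(file); // channel is already closed here; remove the scratch file
        }
        return latencies;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Paths.get(args.length > 0 ? args[0] : System.getProperty("java.io.tmpdir"));
        long[] lat = measure(dir, 600, 4096, true); // 100 s worth of 6 Hz cycles, written back to back
        long max = 0;
        long sum = 0;
        for (long v : lat) {
            max = Math.max(max, v);
            sum += v;
        }
        System.out.printf("%s: mean %.3f ms, max %.3f ms%n", dir, sum / 1e6 / lat.length, max / 1e6);
    }
}
```

Running it first with `/dev/shm` as the argument and then with a directory on the disk under test gives two latency profiles; if the maximum only grows on the disk run, the hardware or filesystem is implicated rather than the library.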

I think you mean HDF5 1.8.02 (I'm not really sure), but it bears stating that you should test with, and USE, the latest stable HDF5. It is very possible this will change your observations greatly (usually for the better). You'd definitely want to keep files and datasets open while you append to them, since closing implies a flush.

Also, if this example file is indicative of your typical collections, you are creating datasets very frequently, which has high overhead. I'm pretty sure you could do 10-100 Hz without much trouble on most Linux filesystems (ext4, XFS), with occasional jitter under high load.

This constantly growing delay sounds very much like a bug I came across a few years ago, but I can't quite remember its cause. I do remember that it was fixed, though. I'll follow up if I recall more.

-Jason

On Mon, May 9, 2016 at 1:58 PM, Karl Hoover <mugwortmoxa@hotmail.com> wrote:

We're developing software for the control of a scientific instrument. At an overall rate of about 6 Hz, the instrument acquires 2.8 milliseconds' worth of 18-bit samples at 250 kHz on up to 24 channels. These data are shipped back over gigabit Ethernet to a Linux PC running a simple Java program, where they can reliably be written to disk as a byte stream at full speed with extremely regular timing. Thus we are certain that our data acquisition, Ethernet transport, Linux PC software and file system are working fine.
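The acquisition figures above work out as follows. This is a small Java sketch with the numbers from this message hard-coded; the class name is hypothetical, and 6 Hz is taken as the nominal rate.

```java
/**
 * Hypothetical back-of-the-envelope calculation using the figures quoted in
 * the message: 250 kHz sampling, 2.8 ms bursts, up to 24 channels, ~6 Hz.
 */
final class AcquisitionBudget {
    static final long SAMPLE_RATE_HZ = 250_000;
    static final long BURST_MICROS = 2_800;   // 2.8 ms per burst
    static final long MAX_CHANNELS = 24;
    static final long CYCLE_RATE_HZ = 6;      // nominal overall rate

    /** 250,000 samples/s * 0.0028 s = 700 samples per channel per burst. */
    static long samplesPerChannelPerBurst() {
        return SAMPLE_RATE_HZ * BURST_MICROS / 1_000_000L;
    }

    /** 700 * 24 = 16,800 samples per burst across all channels. */
    static long samplesPerBurst() {
        return samplesPerChannelPerBurst() * MAX_CHANNELS;
    }

    /** 16,800 * 6 = 100,800 raw samples per second. */
    static long samplesPerSecond() {
        return samplesPerBurst() * CYCLE_RATE_HZ;
    }

    /** 1000 ms / 6 = about 166.7 ms available per cycle. */
    static double cyclePeriodMillis() {
        return 1000.0 / CYCLE_RATE_HZ;
    }

    public static void main(String[] args) {
        System.out.printf("%d samples/channel/burst, %d samples/burst, %d samples/s, %.1f ms per cycle%n",
                samplesPerChannelPerBurst(), samplesPerBurst(), samplesPerSecond(), cyclePeriodMillis());
    }
}
```

The last figure matters for the timing problem: at about 6 Hz each cycle has roughly 167 ms, so a write stall that grows to hundreds of milliseconds consumes one or more whole cycles.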

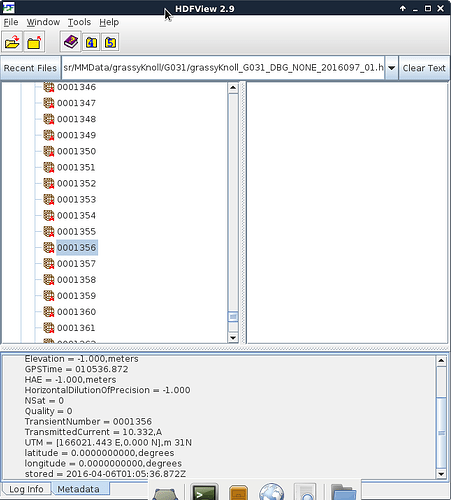

However, the users want the data in a more portable, summarized format, and we selected HDF5. The 700 or so 18-bit samples of each channel are integrated into 18 to 20 time bins, so the resulting datasets are not very large at all. I've attached a screenshot of a region of a typical file, along with example data (much smaller than a typical file).
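A minimal sketch of that reduction, assuming "integrated" means summing consecutive samples within each bin (the actual weighting used by the instrument software is not stated). `TimeBinning.integrate` is a hypothetical name; the bin edges are computed with integer arithmetic so that 700 samples split into 20 bins of 35, or into 18 bins of 38 or 39 samples each.

```java
import java.util.Arrays;

/**
 * Hypothetical sketch of reducing one channel's burst to a few time bins by
 * summing consecutive samples (the real weighting is not stated in the thread).
 */
final class TimeBinning {

    /**
     * Splits samples into nBins contiguous bins and sums each one.
     * Bin b covers indices [b*n/nBins, (b+1)*n/nBins), so every sample lands in
     * exactly one bin even when n is not a multiple of nBins.
     */
    static double[] integrate(int[] samples, int nBins) {
        int n = samples.length;
        if (nBins <= 0 || nBins > n) {
            throw new IllegalArgumentException("need 1 <= nBins <= samples.length");
        }
        double[] bins = new double[nBins];
        for (int b = 0; b < nBins; b++) {
            int start = (int) ((long) b * n / nBins);
            int end = (int) ((long) (b + 1) * n / nBins);
            long sum = 0; // 18-bit samples fit easily in an int; accumulate in a long anyway
            for (int i = start; i < end; i++) {
                sum += samples[i];
            }
            bins[b] = sum;
        }
        return bins;
    }

    public static void main(String[] args) {
        int[] burst = new int[700]; // one channel, one 2.8 ms burst
        for (int i = 0; i < burst.length; i++) {
            burst[i] = i % 100; // synthetic ramp instead of real data
        }
        System.out.println(Arrays.toString(integrate(burst, 20)));
    }
}
```

Computing each edge from the bin index, rather than accumulating a fractional bin width, keeps the split exact and spreads the leftover samples evenly when the count does not divide.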

The instrument operates in two distinct modes. In one mode the instrument is stationary over the region of interest, and this is working flawlessly. In the other mode, the instrument is moved around in arbitrary paths, so the precise timing of data acquisition is obviously critical. What we observe is that the system is fine and very stable at 6 Hz, except that every 9 seconds a delay occurs, starting at about 10 ms and growing without bound to hundreds of milliseconds. There is nothing in my software that knows anything about a 9-second interval, and I've found that this delay only occurs when I write the HDF5 file. All other processing, including creating the HDF5 file, can be performed without any performance problem. It makes no difference whether I keep the HDF5 file open or close it each time. I'm using HDF5.8.02 and the JNI/Java library. Any suggestions about how to fix this problem would be appreciated.
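To pin down a pattern like this from timestamps alone, a small detector can flag cycles whose interval from the previous cycle exceeds the nominal period plus a tolerance. This is a hypothetical Java sketch, not part of the original software; the 5 ms tolerance and the synthetic data in `main` are assumptions. At 6 Hz, stalls every 9 seconds would show up as flagged cycles about 54 apart.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper for spotting late acquisition/write cycles. */
final class LateCycleDetector {

    /**
     * Given the start times (ns) of consecutive cycles, returns the indices of
     * cycles that started more than periodNanos + toleranceNanos after the previous one.
     */
    static List<Integer> lateCycles(long[] startNanos, long periodNanos, long toleranceNanos) {
        List<Integer> late = new ArrayList<>();
        for (int i = 1; i < startNanos.length; i++) {
            long interval = startNanos[i] - startNanos[i - 1];
            if (interval > periodNanos + toleranceNanos) {
                late.add(i);
            }
        }
        return late;
    }

    public static void main(String[] args) {
        long period = 1_000_000_000L / 6; // nominal ~6 Hz cycle
        long[] starts = new long[120];
        for (int i = 1; i < starts.length; i++) {
            // Synthetic data: every 54th cycle (about 9 s at 6 Hz) is 20 ms late.
            starts[i] = starts[i - 1] + period + (i % 54 == 0 ? 20_000_000L : 0L);
        }
        System.out.println("late cycles: " + lateCycles(starts, period, 5_000_000L));
        // prints: late cycles: [54, 108]
    }
}
```

Feeding it timestamps recorded around each HDF5 write would show whether the stalls line up with the 9-second interval and how their size grows over a run.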

Best regards,

Karl Hoover

Senior Software Engineer

Geometrics

_______________________________________________

Hdf-forum is for HDF software users discussion.

Hdf-forum@lists.hdfgroup.org

http://lists.hdfgroup.org/mailman/listinfo/hdf-forum_lists.hdfgroup.org

Twitter: https://twitter.com/hdf5
